BIG-bench (Beyond the Imitation Game Benchmark)

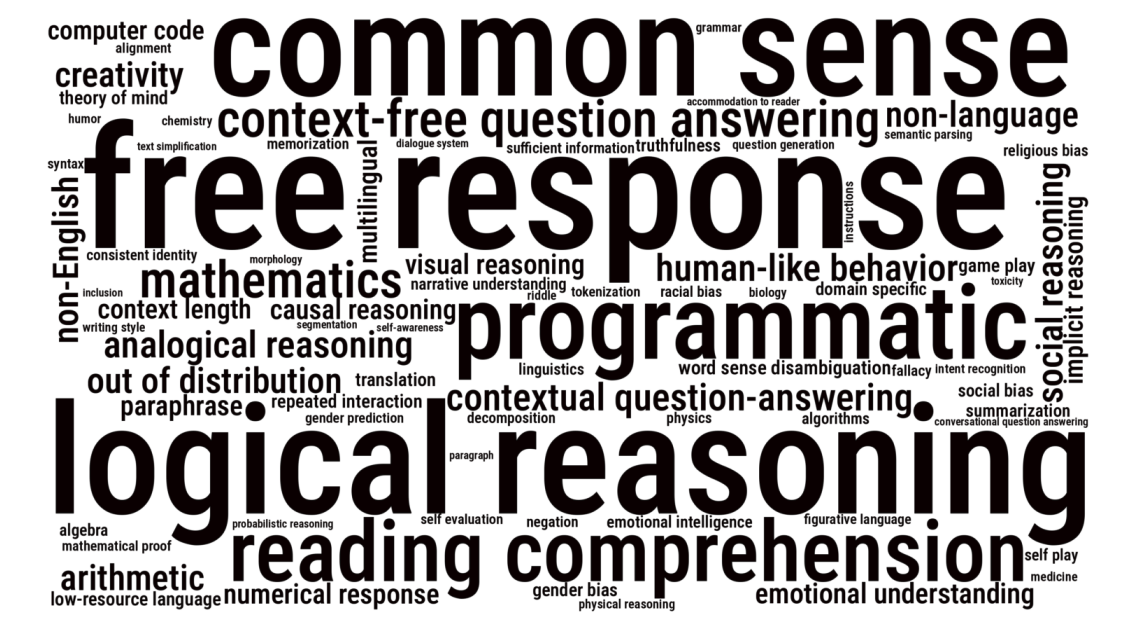

Introduced by Srivastava et al. in Beyond the Imitation Game: Quantifying and extrapolating the capabilities of language models

The Beyond the Imitation Game Benchmark (BIG-bench) is a collaborative benchmark intended to probe large language models and extrapolate their future capabilities. BIG-bench includes more than 200 tasks.


Dataset Loaders

No data loaders found. You can submit your data loader here.


Tasks

- Language Modelling
- Common Sense Reasoning
- Multiple Choice Question Answering (MCQA)
- Logical Reasoning
- Word Sense Disambiguation
- Sarcasm Detection
- General Knowledge
- Multi-task Language Understanding
- Intent Recognition
- Riddle Sense
- Natural Questions
- BIG-bench Machine Learning
- Analogical Similarity
- Identify Odd Metaphor
- Odd One Out
- Crash Blossom
- Auto Debugging
- Crass AI
- Discourse Marker Prediction
- Empirical Judgments
- Irony Identification
- Timedial
- Understanding Fables
- Dark Humor Detection
- Business Ethics
- Moral Disputes
- Moral Permissibility
- Moral Scenarios
- FEVER (2-way)
- FEVER (3-way)
- Misconceptions
- Sentence Ambiguity
- Global Facts
- Miscellaneous
- Similarities Abstraction
- TriviaQA
- High School European History
- High School US History
- High School World History
- International Law
- Jurisprudence
- Logical Fallacies
- Management
- Marketing
- Philosophy
- Prehistory
- Professional Law
- World Religions
- Analytic Entailment
- Entailed Polarity
- Epistemic Reasoning
- Evaluating Information Essentiality
- Logical Args
- Metaphor Boolean
- Physical Intuition
- Presuppositions As NLI
- Abstract Algebra
- College Mathematics
- Elementary Mathematics
- Formal Logic
- High School Mathematics
- Mathematical Induction
- Professional Accounting
- Anatomy
- Clinical Knowledge
- College Medicine
- Human Aging
- Human Organs Senses Multiple Choice
- Medical Genetics
- Nutrition
- Professional Medicine
- Virology
- English Proverbs
- Fantasy Reasoning
- Figure Of Speech Detection
- GRE Reading Comprehension
- Implicatures
- Implicit Relations
- LAMBADA
- Movie Dialog Same Or Different
- Nonsense Words Grammar
- Phrase Relatedness
- Question Selection
- RACE-h
- RACE-m
- Astronomy
- College Biology
- College Chemistry
- College Computer Science
- College Physics
- Computer Security
- Conceptual Physics
- Electrical Engineering
- High School Biology
- High School Chemistry
- High School Computer Science
- High School Physics
- High School Statistics
- Physics MC
- Econometrics
- High School Geography
- High School Government and Politics
- High School Macroeconomics
- High School Microeconomics
- High School Psychology
- Human Sexuality
- Professional Psychology
- Public Relations
- Security Studies
- Sociology
- US Foreign Policy
- Memorization
