Depth Estimation

799 papers with code • 14 benchmarks • 70 datasets

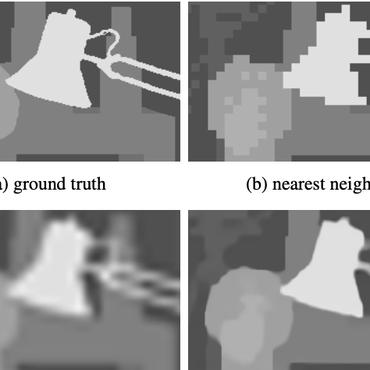

Depth Estimation is the task of estimating, for each pixel, the distance from the camera to the corresponding point in the scene. Depth is extracted from either monocular (single-view) or stereo (multiple views of a scene) images. Traditional methods use multi-view geometry to find the relationship between the images. Newer methods estimate depth directly by minimizing a regression loss, or by learning to generate a novel view from a sequence. The most popular benchmarks are KITTI and NYUv2. Models are typically evaluated with a root mean square error (RMSE) metric.
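To make the evaluation metric concrete, here is a minimal sketch of an RMSE computation over a predicted and a ground-truth depth map. The function name `rmse`, the `min_depth` and `max_depth` parameters, and the convention that non-positive or non-finite ground-truth values mark missing measurements are assumptions for illustration. Real benchmarks such as KITTI and NYUv2 define their own depth caps, crops, and masking rules, which this sketch does not reproduce.

```python
import numpy as np


def rmse(pred, gt, min_depth=1e-3, max_depth=None):
    """Root mean square error over pixels with valid ground truth.

    Assumption: ground-truth pixels that are non-finite or <= min_depth
    carry no measurement and are excluded from the average.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    if pred.shape != gt.shape:
        raise ValueError("pred and gt must have the same shape")

    # Build the validity mask from the ground truth only.
    valid = np.isfinite(gt) & (gt > min_depth)
    if max_depth is not None:
        valid &= gt <= max_depth
    if not valid.any():
        raise ValueError("no valid ground-truth pixels")

    diff = pred[valid] - gt[valid]
    return float(np.sqrt(np.mean(diff ** 2)))


# Toy 2x2 depth maps in metres; the 0.0 entry marks a missing measurement.
gt = np.array([[2.0, 4.0], [0.0, 10.0]])
pred = np.array([[2.5, 3.5], [7.0, 10.0]])
score = rmse(pred, gt)
```

In the toy example only three pixels have valid ground truth, so the large error at the missing pixel does not affect the score. Lower values are better, and the result is in the same unit as the depth maps.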

Libraries

Use these libraries to find Depth Estimation models and implementations.

Most implemented papers

High Quality Monocular Depth Estimation via Transfer Learning

Accurate depth estimation from images is a fundamental task in many applications including scene understanding and reconstruction.

Deeper Depth Prediction with Fully Convolutional Residual Networks

This paper addresses the problem of estimating the depth map of a scene given a single RGB image.

Unsupervised Monocular Depth Estimation with Left-Right Consistency

Learning based methods have shown very promising results for the task of depth estimation in single images.

Vision Transformers for Dense Prediction

We introduce dense vision transformers, an architecture that leverages vision transformers in place of convolutional networks as a backbone for dense prediction tasks.

Digging Into Self-Supervised Monocular Depth Estimation

Per-pixel ground-truth depth data is challenging to acquire at scale.

Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-shot Cross-dataset Transfer

In particular, we propose a robust training objective that is invariant to changes in depth range and scale, advocate the use of principled multi-objective learning to combine data from different sources, and highlight the importance of pretraining encoders on auxiliary tasks.

From Big to Small: Multi-Scale Local Planar Guidance for Monocular Depth Estimation

We show that the proposed method outperforms the state-of-the-art works with significant margin evaluating on challenging benchmarks.

Depth Prediction Without the Sensors: Leveraging Structure for Unsupervised Learning from Monocular Videos

Models and examples built with TensorFlow

Efficient Attention: Attention with Linear Complexities

Dot-product attention has wide applications in computer vision and natural language processing.

What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?

On the other hand, epistemic uncertainty accounts for uncertainty in the model -- uncertainty which can be explained away given enough data.

Datasets

Cityscapes

KITTI

ScanNet

NYUv2

Matterport3D

Middlebury

TUM RGB-D

SUNCG

Taskonomy

2D-3D-S