Image-to-Image Translation

491 papers with code • 37 benchmarks • 29 datasets

Image-to-Image Translation is a computer vision and machine learning task in which a model learns a mapping from an input image to an output image, typically from one visual domain to another. Typical applications include style transfer, data augmentation, and image restoration.

(Image credit: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks)

Most implemented papers

Deep Residual Learning for Image Recognition

Deep residual nets are foundations of our submissions to ILSVRC & COCO 2015 competitions, where we also won the 1st places on the tasks of ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation.

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

Image-to-image translation is a class of vision and graphics problems where the goal is to learn the mapping between an input image and an output image using a training set of aligned image pairs.
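The cycle-consistency idea named in this paper's title can be illustrated with a small sketch. It is a toy under stated assumptions, not the authors' implementation: `G` and `F` are hypothetical stand-ins for the two learned mappings (domain X to Y and Y to X), images are flat lists of floats, and the loss is the mean absolute difference between each image and its round-trip reconstruction.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized flat images."""
    assert len(a) == len(b)
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, x_batch, y_batch):
    """Penalize round trips x -> G(x) -> F(G(x)) and y -> F(y) -> G(F(y))
    that fail to return to the starting image."""
    forward = sum(l1_distance(F(G(x)), x) for x in x_batch) / len(x_batch)
    backward = sum(l1_distance(G(F(y)), y) for y in y_batch) / len(y_batch)
    return forward + backward


# Hypothetical toy mappings standing in for trained networks.
def G(img):      # X -> Y: brighten
    return [p + 0.5 for p in img]


def F(img):      # Y -> X: exact inverse of G
    return [p - 0.5 for p in img]


def F_bad(img):  # Y -> X: an imperfect inverse
    return [p - 0.25 for p in img]


x_batch = [[0.0, 0.25, 1.0]]
y_batch = [[0.5, 0.75, 1.5]]
perfect = cycle_consistency_loss(G, F, x_batch, y_batch)
imperfect = cycle_consistency_loss(G, F_bad, x_batch, y_batch)
```

With the exact inverse the loss is zero; with the imperfect inverse each round trip is off by 0.25 per pixel, so the loss is positive. In training, a term like this would be added to the adversarial losses of the two mappings.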

Image-to-Image Translation with Conditional Adversarial Networks

We investigate conditional adversarial networks as a general-purpose solution to image-to-image translation problems.

StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation

To address this limitation, we propose StarGAN, a novel and scalable approach that can perform image-to-image translations for multiple domains using only a single model.
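One way a single generator can serve several domains is to receive the target domain together with the image. The sketch below assumes a one-hot domain label that is replicated spatially and concatenated to the image as extra channels; the helper names and the nested-list image layout (channels, height, width) are hypothetical and chosen only for illustration.

```python
def one_hot(domain_index, num_domains):
    """Encode a target domain as a one-hot vector."""
    return [1.0 if i == domain_index else 0.0 for i in range(num_domains)]


def concat_domain_label(image, label):
    """Append one constant-valued plane per label entry to the image.

    image: list of C channels, each an H x W nested list of floats.
    Returns a new list with C + len(label) channels.
    """
    height, width = len(image[0]), len(image[0][0])
    label_planes = [[[v] * width for _ in range(height)] for v in label]
    return image + label_planes


# A 3-channel 2x2 image, conditioned on target domain 1 of 3.
image = [[[0.1, 0.2], [0.3, 0.4]] for _ in range(3)]
generator_input = concat_domain_label(image, one_hot(1, 3))
```

The generator then sees a 6-channel input, and changing only the label asks the same model for a different target domain.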

U-GAT-IT: Unsupervised Generative Attentional Networks with Adaptive Layer-Instance Normalization for Image-to-Image Translation

We propose a novel method for unsupervised image-to-image translation, which incorporates a new attention module and a new learnable normalization function in an end-to-end manner.

Semantic Image Synthesis with Spatially-Adaptive Normalization

Previous methods directly feed the semantic layout as input to the deep network, which is then processed through stacks of convolution, normalization, and nonlinearity layers.

High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs

We present a new method for synthesizing high-resolution photo-realistic images from semantic label maps using conditional generative adversarial networks (conditional GANs).

Multimodal Unsupervised Image-to-Image Translation

To translate an image to another domain, we recombine its content code with a random style code sampled from the style space of the target domain.
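The recombination step described above can be sketched as follows. This is a toy under stated assumptions: the content code is a flat list of floats, the style code is drawn from a standard normal distribution, and `toy_decoder` is a hypothetical stand-in for a learned decoder in which the style code sets a global scale and shift.

```python
import random


def sample_style_code(dim, rng):
    """Draw a random style code from a standard normal prior (assumed)."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def toy_decoder(content_code, style_code):
    """Hypothetical decoder: style controls a global scale and shift,
    while the content code supplies the structure being modulated."""
    scale = 1.0 + style_code[0]
    shift = style_code[1]
    return [scale * c + shift for c in content_code]


def translate(content_code, rng, style_dim=2):
    """Recombine a fixed content code with a freshly sampled style code."""
    return toy_decoder(content_code, sample_style_code(style_dim, rng))


rng = random.Random(0)
content = [0.0, 0.5, 1.0]
first = translate(content, rng)
second = translate(content, rng)
```

Because each call samples a new style code, the same content code yields different outputs, which is what makes the translation multimodal.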

StarGAN v2: Diverse Image Synthesis for Multiple Domains

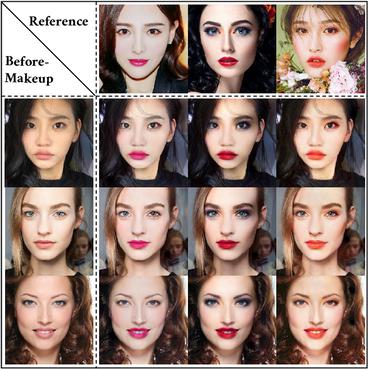

A good image-to-image translation model should learn a mapping between different visual domains while satisfying the following properties: 1) diversity of generated images and 2) scalability over multiple domains.

Everybody Dance Now

This paper presents a simple method for "do as I do" motion transfer: given a source video of a person dancing, we can transfer that performance to a novel (amateur) target after only a few minutes of the target subject performing standard moves.

Datasets

Cityscapes

KITTI

ADE20K

CelebA-HQ

SYNTHIA

GTA5

DeepFashion

Perceptual Similarity

AFHQ

COCO-Stuff