NYUv2 (NYU-Depth V2)

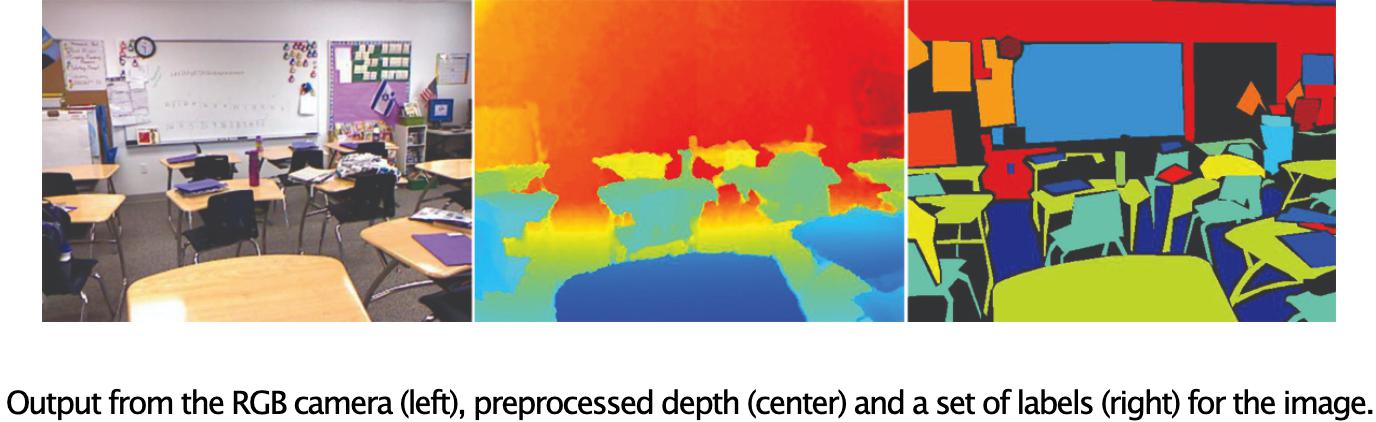

Introduced by Nathan Silberman et al. in Indoor Segmentation and Support Inference from RGBD ImagesThe NYU-Depth V2 data set is comprised of video sequences from a variety of indoor scenes as recorded by both the RGB and Depth cameras from the Microsoft Kinect. It features:

- 1449 densely labeled pairs of aligned RGB and depth images

- 464 new scenes taken from 3 cities

- 407,024 new unlabeled frames

- Each object is labeled with a class and an instance number. The dataset has several components:

- Labeled: A subset of the video data accompanied by dense multi-class labels. This data has also been preprocessed to fill in missing depth labels.

- Raw: The raw RGB, depth and accelerometer data as provided by the Kinect.

- Toolbox: Useful functions for manipulating the data and labels.

Benchmarks

Papers

| Paper | Code | Results | Date | Stars |

|---|

Dataset Loaders

Tasks

-

Semantic Segmentation

Semantic Segmentation

-

Instance Segmentation

Instance Segmentation

-

3D Object Detection

3D Object Detection

-

Depth Estimation

Depth Estimation

-

Panoptic Segmentation

Panoptic Segmentation

-

Monocular Depth Estimation

Monocular Depth Estimation

-

Scene Segmentation

Scene Segmentation

-

Multi-Task Learning

Multi-Task Learning

-

Depth Completion

Depth Completion

-

Real-Time Semantic Segmentation

Real-Time Semantic Segmentation

-

Surface Normals Estimation

Surface Normals Estimation

-

3D Semantic Scene Completion

3D Semantic Scene Completion

-

Boundary Detection

Boundary Detection

-

3D Semantic Scene Completion from a single RGB image

3D Semantic Scene Completion from a single RGB image

-

Surface Normal Estimation

Surface Normal Estimation

-

Scene Classification (unified classes)

Scene Classification (unified classes)

-

Plane Instance Segmentation

Plane Instance Segmentation

-

Zero-shot Scene Classification (unified classes)

Zero-shot Scene Classification (unified classes)

Similar Datasets

-

Semantic Segmentation

Semantic Segmentation

-

Instance Segmentation

Instance Segmentation

-

3D Object Detection

3D Object Detection

-

Depth Estimation

Depth Estimation

-

Panoptic Segmentation

Panoptic Segmentation

-

Monocular Depth Estimation

Monocular Depth Estimation

-

Scene Segmentation

Scene Segmentation

-

Multi-Task Learning

Multi-Task Learning

-

Depth Completion

Depth Completion

-

Real-Time Semantic Segmentation

Real-Time Semantic Segmentation

-

Surface Normals Estimation

Surface Normals Estimation

-

3D Semantic Scene Completion

3D Semantic Scene Completion

-

Boundary Detection

Boundary Detection

-

3D Semantic Scene Completion from a single RGB image

3D Semantic Scene Completion from a single RGB image

-

Surface Normal Estimation

Surface Normal Estimation

-

Scene Classification (unified classes)

Scene Classification (unified classes)

-

Plane Instance Segmentation

Plane Instance Segmentation

-

Zero-shot Scene Classification (unified classes)

Zero-shot Scene Classification (unified classes)