Policy Gradient Methods

Policy Gradient Methods

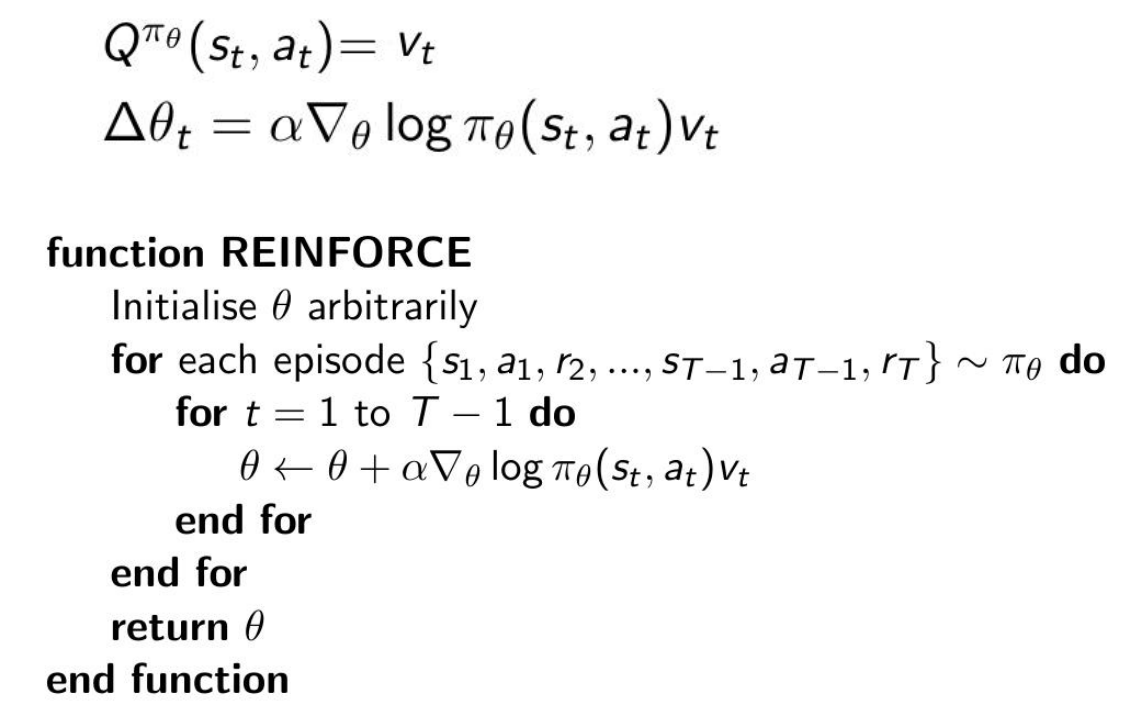

REINFORCE

REINFORCE is a Monte Carlo variant of a policy gradient algorithm in reinforcement learning. The agent collects samples of an episode using its current policy, and uses it to update the policy parameter $\theta$. Since one full trajectory must be completed to construct a sample space, it is updated as an off-policy algorithm.

$$ \nabla_{\theta}J\left(\theta\right) = \mathbb{E}_{\pi}\left[G_{t}\nabla_{\theta}\ln\pi_{\theta}\left(A_{t}\mid{S_{t}}\right)\right]$$

Image Credit: Tingwu Wang

Papers

| Paper | Code | Results | Date | Stars |

|---|

Tasks

| Task | Papers | Share |

|---|---|---|

| Reinforcement Learning (RL) | 51 | 23.83% |

| Sentence | 8 | 3.74% |

| Text Generation | 8 | 3.74% |

| Image Classification | 7 | 3.27% |

| Question Answering | 6 | 2.80% |

| Decision Making | 5 | 2.34% |

| Recommendation Systems | 5 | 2.34% |

| Image Captioning | 5 | 2.34% |

| Retrieval | 4 | 1.87% |

Usage Over Time

Components

| Component | Type |

|

|---|---|---|

| 🤖 No Components Found | You can add them if they exist; e.g. Mask R-CNN uses RoIAlign |