Trending Research

Moving Object Segmentation: All You Need Is SAM (and Flow)

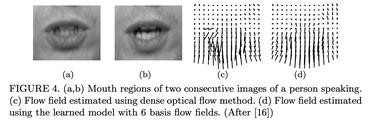

The objective of this paper is motion segmentation -- discovering and segmenting the moving objects in a video.

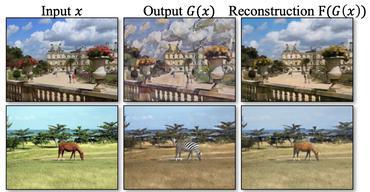

SEED-X: Multimodal Models with Unified Multi-granularity Comprehension and Generation

We hope that our work will inspire future research into what can be achieved by versatile multimodal foundation models in real-world applications.

AutoWebGLM: Bootstrap And Reinforce A Large Language Model-based Web Navigating Agent

Large language models (LLMs) have fueled many intelligent agent tasks, such as web navigation -- but most existing agents perform far from satisfactorily on real-world webpages due to three factors: (1) the versatility of actions on webpages, (2) HTML text exceeding model processing capacity, and (3) the complexity of decision-making due to the open-domain nature of the web.

Magic Clothing: Controllable Garment-Driven Image Synthesis

We propose Magic Clothing, a latent diffusion model (LDM)-based network architecture for an unexplored garment-driven image synthesis task.

Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction

We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines the autoregressive learning on images as coarse-to-fine "next-scale prediction" or "next-resolution prediction", diverging from the standard raster-scan "next-token prediction".

Ranked #7 on Image Generation on ImageNet 256x256

Llama 2: Open Foundation and Fine-Tuned Chat Models

In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters.

Ranked #2 on Question Answering on PubChemQA

Assisting in Writing Wikipedia-like Articles From Scratch with Large Language Models

We study how to apply large language models to write grounded and organized long-form articles from scratch, with comparable breadth and depth to Wikipedia pages.

AgentKit: Flow Engineering with Graphs, not Coding

The chains of nodes can be designed to explicitly enforce a naturally structured "thought process".

Multi-Session SLAM with Differentiable Wide-Baseline Pose Optimization

The backbone is trained end-to-end using a novel differentiable solver for wide-baseline two-view pose.

Towards Large Language Models as Copilots for Theorem Proving in Lean

In this paper, we explore LLMs as copilots that assist humans in proving theorems.